Saturday, November 29, 2008

Saturday, November 22, 2008

3D Boid Flock Video

I made a new video with our SwarmVis tool. This flocking is created with traditional Reynolds boid rules. Check it out!

A higher resolution version of this 3d flocking is available (8.2mb quicktime).

Tags:

Swarms

Wednesday, November 19, 2008

ORTS Game AI Competition

Recently I came upon the ORTS RTS Game AI Competition which is an AI competition that is ran with ORTS, an open RTS engine.

The openness of the engine allows for several different sub-competitions within the competition. These include collaborate path-finding, strategic combat (bases and tanks), complete RTS games (buildings, resources, fog of war, units, etc.) and tactical combat (20 tanks, 50 marines, flat terrain).

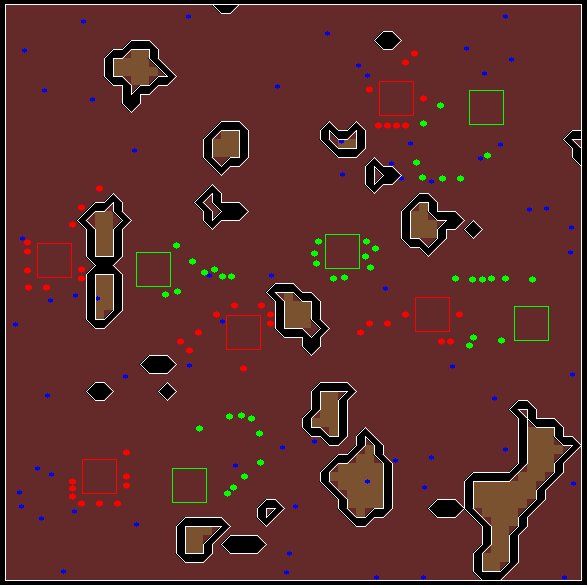

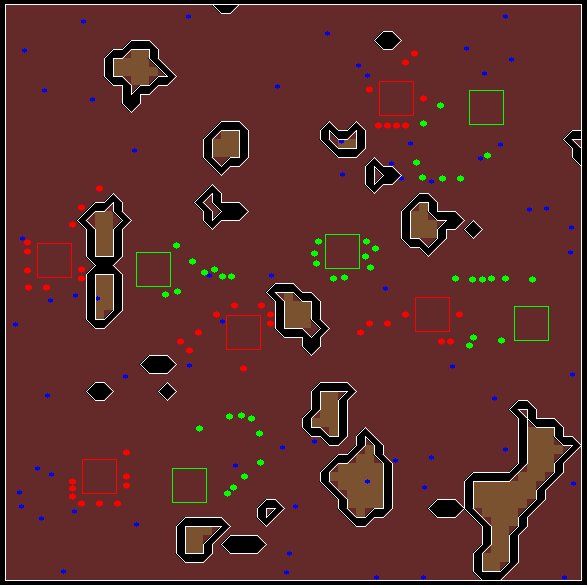

As an added bonus, the games can be viewed with in 3D, 2D or simply just have it spit out text (images taken from the ORTS website):

I really wish I had time to work on this domain. I think it has a lot of interesting properties. All algorithms have to be robust (i.e., not dependent on individuals) a-la swarm system. The competitiveness introduces another dimension; it's not just search. There is plenty of enemy modeling and decision making that can be done. Plus, there are tons of learning that could go on. I'm sure some virtual creatures type research could be applied to build strategies. Also, I could imagine interesting research in playing 2v2 games, where agents have to pass messages and make joint plans. I need to find someone who is interested in working on this so that I can live vicariously through their work.

Here are some videos from YouTube, showing the action.

100 marine vs. 100 marine skirmish

Full 1v1 game

The openness of the engine allows for several different sub-competitions within the competition. These include collaborate path-finding, strategic combat (bases and tanks), complete RTS games (buildings, resources, fog of war, units, etc.) and tactical combat (20 tanks, 50 marines, flat terrain).

As an added bonus, the games can be viewed with in 3D, 2D or simply just have it spit out text (images taken from the ORTS website):

I really wish I had time to work on this domain. I think it has a lot of interesting properties. All algorithms have to be robust (i.e., not dependent on individuals) a-la swarm system. The competitiveness introduces another dimension; it's not just search. There is plenty of enemy modeling and decision making that can be done. Plus, there are tons of learning that could go on. I'm sure some virtual creatures type research could be applied to build strategies. Also, I could imagine interesting research in playing 2v2 games, where agents have to pass messages and make joint plans. I need to find someone who is interested in working on this so that I can live vicariously through their work.

Here are some videos from YouTube, showing the action.

100 marine vs. 100 marine skirmish

Full 1v1 game

Monday, November 17, 2008

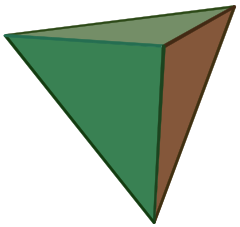

Tetrahedron Swarm

In my data visualization class, my group partner Niels Kasch and I have been working on a swarm visualization program (Niels has done most of the programming). It's not quite done yet, so a description of the software will come at a later date. I did however, want to show off one of my favorite domains: the three-dimensional tetrahedron swarm.

A high quality video of the tetrahedra (6.3mb quicktime) is available.

Here are some screenshots:

The shape is formed by agents adhering to the following procedure.

First, four corners are selected by the swarm via a simple election algorithm.

The agents follow the following rules:

- If a corner, be attracted to the three other corners very slightly.

- If not a corner, be attracted to the two closest corners with great, but equal, strength. That is, the output force vector of this rule is the sum of two vectors of magnitude f. Note that when an agent is on the line formed by its two closest corners, the forces cancel each other out.

- Avoid any agents that get too close.

It is amazing that these simple rules result in such neat behavior. This is what made me interested in this topic.

Tags:

Swarms

Sunday, November 16, 2008

Hill-Climbing On Boid Swarm Configurations

I implemented a rather silly hill-climbing algorithm that I used on my boid density domain to find a configuration of boid parameters that will produce a flock of a desired density.

The algorithm is as follows:

- Generate a bunch of random points around the best solution in the boid 4-dimensional parameter space (number of agents, alignment factor, avoidance factor, cohesion factor)

- Check each one to see what the actual density is for that configuration

- If the density is closer to the goal density than the best solution, then this solution is the new best solution and go back to step 1

- If at any time a solution is found that is within the error bounds, stop

I made a video of this process (11MB).

This is pretty stupid. This will serve as a good starting point or framework for later more complex algorithms. I really need to use some kind of search with memory, so that I can predict where I should hill-climb next... or some kind of machine learning technique like k-nearest neighbor or kernel density estimation. Also, I hope to have some more search algorithms, such as simulated annealing, applied to this problem. At least now I have a nice little framework going so plugging in new algorithms should be rather simple.

Refactored My Swarn Source Code

Tonight I spent a significant amount of time putting my boid density domain together into coherent python script. I figure since I'll be having some people working with me now, I shouldn't put them through the same pain I go through whenever trying to integrate my scripts with bigger things. With this new script, all you have to do is call the run_boids and it spits out the density that it got.

Also, I made a pretty bad ass python vector class. I basically wanted a tuple where tup1 + tup2 is the mathematical sum, not the concatenation. Honestly, this was the first time I have used inheritance in python and I must say I wasn't disappointing. Plus, I heavily overloaded operators to make the coding easier. I'm probably more excited about this than I should be. It really will make my coding easier, though.

Also, I made a pretty bad ass python vector class. I basically wanted a tuple where tup1 + tup2 is the mathematical sum, not the concatenation. Honestly, this was the first time I have used inheritance in python and I must say I wasn't disappointing. Plus, I heavily overloaded operators to make the coding easier. I'm probably more excited about this than I should be. It really will make my coding easier, though.

Thursday, November 13, 2008

Project now on Google Code

I was introduced to using Google Code recently by my fellow graduate student Niels. Before, I didn't know how awesome it was... but now I know. I had always looked through it and knew that open source projects were hosted there but I never knew how easy it was to make your own project and interface svn with it. I am excited about being able to continuously back up my work as I make changes to it. The source browsing feature is absolutely amazing. The other features such as the wiki I may never use, but I appreciate them.

My new Google Code project: minerswarm

I added pretty much all code (python scripts mostly), data (results, swarm position data) and papers (LaTeX source, PDFs, images, etc.) that have to do with my dissertation research.

Tags:

meta-work

AGI '09 Paper: "Understanding the Brain's Emergent Properties"

I just recently submitted a paper to The Second Conference on Artificial General Intelligence. The paper is a position statement describes how our work in swarm-level interactive control could be applied to building artificial general intelligence. This paper was done in collaboration with Marc Pickett and Dr. Marie desJardins.

To read more about rule abstraction, check out our AAMAS '09 paper that is currently still under review.

Title: Understanding the Brain's Emergent Properties

Abstract:

In this paper, we discuss the possibility of applying rule abstraction, a method designed to understand emergent systems, to the physiology of the brain. Rule abstraction reduces complex systems into simpler subsystems, each of which are then understood in terms of their respective subsystems. This process aids in the understanding of complex systems and how behavior emerges from the low-level interactions. We believe that this technique can be applied to the brain in order to understand the mind and its essential cognitive phenomena. Once a sufficient model of the brain and mind is created, our framework could then be used to build artificial general intelligence that is based on human intelligence.

Link: Understanding the Brain's Emergent Properties by Don Miner, Marc Pickett, and Marie desJardins

To read more about rule abstraction, check out our AAMAS '09 paper that is currently still under review.

Title: Understanding the Brain's Emergent Properties

Abstract:

In this paper, we discuss the possibility of applying rule abstraction, a method designed to understand emergent systems, to the physiology of the brain. Rule abstraction reduces complex systems into simpler subsystems, each of which are then understood in terms of their respective subsystems. This process aids in the understanding of complex systems and how behavior emerges from the low-level interactions. We believe that this technique can be applied to the brain in order to understand the mind and its essential cognitive phenomena. Once a sufficient model of the brain and mind is created, our framework could then be used to build artificial general intelligence that is based on human intelligence.

Link: Understanding the Brain's Emergent Properties by Don Miner, Marc Pickett, and Marie desJardins

Saturday, November 1, 2008

Campaign Advertising and The Dollar Auction

In my opinion, campaign financing (mostly fund raising) is out of control. According to CNN, Obama has raised 450 million USD and McCain has raised 230 million USD (10/1/08). This really isn't that much money when looked at in the grand scheme of US politics, but to me it seems like preventable a waste.

Both campaigns are forced to spend money on advertising, while also try to one-up the other. This reminds me of the Dollar Auction in which someone auctions off one dollar. The catch is both the top-bidder and the second place bidder have to pay up. Lets say that election is worth $1. McCain bids $.98. Obama bids $.99, McCain bids $1. At this point, if Obama doesn't bid $1.01, he will be out $.99. Therefore, he is committed and places the bid. This goes out of control and both parties keep placing bids so that they don't lose all their money. The loser of the 2008 election will have wasted all of his money on advertising. Of course this doesn't translate one-to-one to today's campaign, but I think the parallels are easy to see. Campaign spending on advertising is going crazy and unfortunately, one party's money spent on advertising will be completely wasted in vain. Couldn't this money be spent better somewhere else?

The dollar auction is good for one person only: the seller. So, who is the seller in this situation? The media. They are reaping in crazy amounts of money that campaigns are pouring into advertising.

What can be done? I am not quite sure. Not that this is a necessarily good idea, but perhaps some sort of regulation could be set into place, limiting the amount of money that can be spent on advertising every month. Should the winner of the campaign be affected by how much money they have raised, even if the money is from every-day Americans? Even cigarette companies supported legislation banning advertising, knowing that spending was out of control and they could increase profits by not advertising at all.

Both campaigns are forced to spend money on advertising, while also try to one-up the other. This reminds me of the Dollar Auction in which someone auctions off one dollar. The catch is both the top-bidder and the second place bidder have to pay up. Lets say that election is worth $1. McCain bids $.98. Obama bids $.99, McCain bids $1. At this point, if Obama doesn't bid $1.01, he will be out $.99. Therefore, he is committed and places the bid. This goes out of control and both parties keep placing bids so that they don't lose all their money. The loser of the 2008 election will have wasted all of his money on advertising. Of course this doesn't translate one-to-one to today's campaign, but I think the parallels are easy to see. Campaign spending on advertising is going crazy and unfortunately, one party's money spent on advertising will be completely wasted in vain. Couldn't this money be spent better somewhere else?

The dollar auction is good for one person only: the seller. So, who is the seller in this situation? The media. They are reaping in crazy amounts of money that campaigns are pouring into advertising.

What can be done? I am not quite sure. Not that this is a necessarily good idea, but perhaps some sort of regulation could be set into place, limiting the amount of money that can be spent on advertising every month. Should the winner of the campaign be affected by how much money they have raised, even if the money is from every-day Americans? Even cigarette companies supported legislation banning advertising, knowing that spending was out of control and they could increase profits by not advertising at all.

Approaches to Artificial General Intelligence

This past Friday, I hosted Ben Goertzel as a colloquium speaker here at UMBC. Dr. Goertzel is a strong proponent for the development and importance of artificial general intelligence (AGI). He is one of the organizers of the AGI conference, CEO of Novamente and is working on the OpenCog project. At the talk, he discussed some background information on AGI and gave a high-level overview of OpenCog. I found his presentation as interesting as the talks he gave at last year's AGI conference.

There was one slide in which Dr. Goertzel discussed what kind of system will give rise to true AGI.

Dr. Goertzel mentioned a few approaches (admitting it is not all possibilities) to AGI, two of which I will contrast: (1) some sort of emergent system, accurate neural model of the brain, or a general theoretical perspective, and (2) an architecture or framework that pieces together different parts of computer science and artificial intelligence. I believe that (1) is not possible right now, due to lack of understanding on how the brain is organized and how the mind works. Path (2), on the other hand, may be able to create AGI relatively soon, but nobody is really sure. All we can do is make attempts at it and see what happens. Current architectures include OpenCog, SOAR, and LIDA. The more "single-theory" (a term I just invented) systems believe that the mind is organized in a particular simple way. Two examples of these (in my opinion) are Subsumption and the Memory-Prediction Framework.

Both general approaches may give rise to AGI eventually. However, I find it hard to believe that any of the current architectures will scale to Strong-AI eventually. They seem to be as limited as the AI techniques they use. Even if using lots of AI techniques together may make the overall system better, it still seems limited. Major advancements in this approach will be made by stronger AI techniques over time.

On the other hand, I think that the single-theory approach is far more robust. The downside is these current theories are just theories without much scientific backing. Some usually-intelligent fellow comes up with with a system that would seem to make intelligence. For example, On Intelligence makes plenty of sense but not amazingly hasn't given rise to Strong-AI. I personally enjoy biologically inspired approaches to AI. I think that using the brain as a starting point for figuring out to make intelligence is a decent strategy. As neuroscience advances we get closer to human-level AI. I would like to believe that the brain's organization is made out of a bunch of hierarchical fractals and patterns from which emerges what we call intelligence. Much like when first learning recursion: figuring out the recursive function is a difficult cognitive task, but once it is found it is elegant, short and easy to understand. I seriously doubt that our genetic code includes a 1:1 blueprint of our brain. I'm sure our genetic code is storing some sort of recursive production rule that creates out brain. These simple-theory methods aren't quite possible right now, but may be in the near future. Also, I believe a simple-theory approach will be more robust and scalable (much like nature) than the frameworks of today.

There was one slide in which Dr. Goertzel discussed what kind of system will give rise to true AGI.

Dr. Goertzel mentioned a few approaches (admitting it is not all possibilities) to AGI, two of which I will contrast: (1) some sort of emergent system, accurate neural model of the brain, or a general theoretical perspective, and (2) an architecture or framework that pieces together different parts of computer science and artificial intelligence. I believe that (1) is not possible right now, due to lack of understanding on how the brain is organized and how the mind works. Path (2), on the other hand, may be able to create AGI relatively soon, but nobody is really sure. All we can do is make attempts at it and see what happens. Current architectures include OpenCog, SOAR, and LIDA. The more "single-theory" (a term I just invented) systems believe that the mind is organized in a particular simple way. Two examples of these (in my opinion) are Subsumption and the Memory-Prediction Framework.

Both general approaches may give rise to AGI eventually. However, I find it hard to believe that any of the current architectures will scale to Strong-AI eventually. They seem to be as limited as the AI techniques they use. Even if using lots of AI techniques together may make the overall system better, it still seems limited. Major advancements in this approach will be made by stronger AI techniques over time.

On the other hand, I think that the single-theory approach is far more robust. The downside is these current theories are just theories without much scientific backing. Some usually-intelligent fellow comes up with with a system that would seem to make intelligence. For example, On Intelligence makes plenty of sense but not amazingly hasn't given rise to Strong-AI. I personally enjoy biologically inspired approaches to AI. I think that using the brain as a starting point for figuring out to make intelligence is a decent strategy. As neuroscience advances we get closer to human-level AI. I would like to believe that the brain's organization is made out of a bunch of hierarchical fractals and patterns from which emerges what we call intelligence. Much like when first learning recursion: figuring out the recursive function is a difficult cognitive task, but once it is found it is elegant, short and easy to understand. I seriously doubt that our genetic code includes a 1:1 blueprint of our brain. I'm sure our genetic code is storing some sort of recursive production rule that creates out brain. These simple-theory methods aren't quite possible right now, but may be in the near future. Also, I believe a simple-theory approach will be more robust and scalable (much like nature) than the frameworks of today.

Thoughts on the Loebner Prize

The Loebner Prize is the first formal competition that implements the famous Turing Test, which asks "can a machine think?" Turing suggests that if the artificial intelligence agent can fool humans that it is actually human, the machine is thinking. If a machine is found to pass the test, it will win the Loebner Prize gold medal and 100,000 USD. Since machines are pretty far off from this goal, to stimulate interest, the Loebner prize awards a bronze medal every year along with 3000 USD to the agent "closest" to passing the Turing Test.

This year's winner is Elbot by Artificial Solutions, which you can chat with online. I am rather surprised by the fact that it makes grammatical sentences while incorporating semi-relevant information in its responses. I am going to guess that someone spent a really long time writing up rules for responses. Elbot's responses are a big improvement (depending on how you look at it) over ELIZA, which was made in 1966.

A lot of things are worth doing for money. For example, I would probably take a job doing data entry eight hours a day if I was getting paid 300,000 USD a year. Current contestants in the Loebner prize can only practically expect to win 3,000 USD, which is far less than the amount of work put into a lot of these bots. Therefore, in my opinion, there almost always has to be some kind of exterior funding to the production of these bots. There is no way any self-respecting AI researcher would spend his own time developing a chatbot like the ones in this year's competition.

The Loebner prize (and perhaps the Turing Test), at best, is a poorly defined fitness function that a population of chatbot developers are converging towards. Instead of using actual learning or reasoning, the developers of these hacks use shortcuts such as a massive amount of predefined rules, reorganizing input text, etc. The Loebner prize has created a local maxima that is difficult to get out of in the grand scheme of developing truly intelligent machines.

I think the main reason for so much hatred directed at the Loebner prize comes out of the fact that Turing's monumental article Computing Machinery and Intelligence has been twisted into some sideshow contest. Turing is one of the founders of computer science and AI, and many agree that the Loebner prize has very little to do with Turing and his vision. In my opinion, like many things like this, people should not read too literally into statements written by people in the naive past.

Something to realize, though, is that these chatbots do have some purpose in this world. Why else are they getting funding by businesses? They could help with automated telephone calls (not sure if this is a good thing or not), automated online technical support, seemingly intelligent conversations with non-player-characters in video games, etc. There is demand for applications in these domains and many people aren't willing to wait around for someone to create Strong AI. The Loebner prize's downfall is this rather small domain in AI has an unfortunate association with the founding ideas set forth through Turing, that many people respect.

There is one major reason I think the Loebner prize is hurtful to the AI community. Unfortunately, from first glance, chatbots may be the most interesting thing to ever come out of AI. The media and uninformed bloggers just can't help to over-sensationalize this contest. For example, the article titled "Loebner Prize Winner Announced; Is He Human?". NO. It is NOT human. What the hell is wrong with you? AI in general, and even more so chatbots are not anything close to actual intelligence. Do you consider a huge database of response rules intelligent? The article "University of Reading to host artificial intelligence battle royale" is more subtle in its small contribution to the distortion of the public perception of AI. The Loebner prize is NOT an AI contest. It is a chatbot contest. Would you say an ice sculpture contest is an "artistic battle royale"? NO. This makes people seem to think that myself and other AI researchers are sitting around working on chatbots. I guess this is a problem throughout the media with all domains, not only this one. Regardless, every time I read some article about how the Loebner prize might produce the next HAL9000 and may be the dawn of the real-world Matrix, I throw up a little bit in the back of my throat.

Oh, and please stop with the HAL9000 eye image that is usually placed along with these articles.

Tags:

AI

Sunday, October 26, 2008

October 2008 Research Map

Instead of writing out a text outline of what current problems remain in my dissertation research, I decided to make a hierarchical graph outlining open problems. I made the figure with OmniGraffle (lays out the hierarchy on its own).

I strongly suggest this method to people trying to map out their dissertation. I have found it easier to keep up to date after meetings and more intuitive to lay out. The only problem is that I can't place paragraph-length descriptions without making the graph cluttered. This results in my research map pretty much only useful to me because it only contains key words. Sorry.

Link: October 2008 Research Map

Tags:

meta-work

DARPA Grand Challenge Videos

I wanted to show a video to my undergraduate AI class about The DARPA Grand Challenge (a competition to design and build the best unmanned vehicles). After searching around, I quickly found "The Great Robot Race", a NOVA documentary on the 2006 DARPA Grand Challenge, that chronicles the stories of several competing teams. I thought the documentary was entertaining and offered great insight into the teams, how they are ran and the struggles they went through. Unfortunately, the AI that they did talk about was very brief and abstract; typical of a "mainstream" documentary.

In searching for supplemental material, I found a talk that Dr. Sebastian Thrun (Leader of the Stanford team that won the 2006 grand challenge) gave at Google, called "Winning the DARPA Grand Challenge." This talk, although similar in length to the NOVA documentary, gives great detail in the intelligent systems behind Stanley, the Stanford robot. As a result, this video is much more interesting from an artificial intelligence standpoint. After watching this talk, I decided I'll just show this to my class instead. Damn. I already paid $17 for the NOVA documentary on DVD.

On a side note, I don't know why some documentaries have to dumb the "meat" of the research so much. There was content covered in Thrun's talk that could easily be explained to a layman -- and wasn't. I wonder if I am incorrect in my faith for the common documentary viewer or the producers didn't feel like it was interesting. Who knows. I wonder how much interesting information I am missing when I watch documentaries outside of my area (E.g., ant documentaries).

Link: "The Great Robot Race" (NOVA)

Link: "Winning the DARPA Grand Challenge" (Dr. Sebastian Thrun)

New Swarm Videos

I added a few videos to my research site's video section. If you've never checked out my older videos, you should. They are probably more interesting than the ones talked about in this post.

First, there are videos of my Form Circle and Boid Density experiments, which are described in detail in my AAMAS '09 Paper: "Learning Abstract Properties of Swarm Systems" (currently under review). In addition, there is a video of a 3-dimensional boids domain I have been playing with.

I would like to mention that these videos were made by taking still image frames dumped by my simulations, then made into a video with the excellent program called FrameByFrame.

Form Circle

formcircle1.mov (3.6mb quicktime movie)

Boids in this demonstration self-organize into a circle. We varied the avoidance factor and the number of agents and ran several experiments to see what the radius would be. Our final goal was to create a mapping between the low-level parameters and the high-level property of radius.

Boid Density

boiddensity.mov (3.5mb quicktime movie)

Boids in this demonstration flock towards a goal (red dot). We record the average density on the way to the goal. Our final goal was similar to that of the form circle domain: creating a mapping between boid density and the low-level parameters.

3D Boids

boids1.mov (2.2mb quicktime movie)

Boids moving around in a 3 dimensional environment. Bigger boids are closer to the screen than smaller boids. Boids are dynamically color coded based on their flock. This program hasn't been integrated in my research yet.

Link: Don's Swarm Videos

First, there are videos of my Form Circle and Boid Density experiments, which are described in detail in my AAMAS '09 Paper: "Learning Abstract Properties of Swarm Systems" (currently under review). In addition, there is a video of a 3-dimensional boids domain I have been playing with.

I would like to mention that these videos were made by taking still image frames dumped by my simulations, then made into a video with the excellent program called FrameByFrame.

Form Circle

formcircle1.mov (3.6mb quicktime movie)

Boids in this demonstration self-organize into a circle. We varied the avoidance factor and the number of agents and ran several experiments to see what the radius would be. Our final goal was to create a mapping between the low-level parameters and the high-level property of radius.

Boid Density

boiddensity.mov (3.5mb quicktime movie)

Boids in this demonstration flock towards a goal (red dot). We record the average density on the way to the goal. Our final goal was similar to that of the form circle domain: creating a mapping between boid density and the low-level parameters.

3D Boids

boids1.mov (2.2mb quicktime movie)

Boids moving around in a 3 dimensional environment. Bigger boids are closer to the screen than smaller boids. Boids are dynamically color coded based on their flock. This program hasn't been integrated in my research yet.

Link: Don's Swarm Videos

Tags:

Swarms

AAMAS '09 Paper: "Learning Abstract Properties of Swarm Systems"

Update: This paper has been rejected :(. Time to address the reviewers' comments and get ready for IJCAI 09.

I just recently submitted a paper to the Eighth International Conference on Autonomous Agents and Multiagent Systems (AAMAS '09). The paper describes work done by myself and my adviser Dr. Marie desJardins and describes our current endeavors in swarm-level interactive control.

To make a long story short, this paper discusses the method of rule abstraction, which is used to define high-level properties of swarms that emerge out of low-level behaviors. We provide an example implementation of rule abstraction and apply it to two domains: agents that self-organize into circles (shown in the image in this post) and boid agents that flock.

Title: Learning Abstract Properties of Swarm Systems

Abstract:

Rule abstraction is an intuitive new tool that we propose for implementing and controlling swarm systems. The methods presented in this paper encourage a new paradigm for designing swarm applications: engineers can interact with a swarm at the abstract (swarm) level instead of the individual (agent) level. This is made possible by modeling and learning how particular abstract properties arise from low-level agent behaviors. In this paper, we present a procedure for building abstract properties and discuss how they can be used. We also provide experimental results showing that abstract rules can be learned through observation.

Link: Learning Abstract Properties of Swarm Systems by Don Miner and Marie desJardins

Python Tutorial

I use the Python programming language for the majority of my coding tasks these days. Note the title of this blog.

In Dr. Tim Finin's Principles of Programming Languages course (CMSC 331), I will be giving a guest lecture tutorial on Python. Most of these students have a semester or two of Java and C experience from introductory computer science classes, so I tried to keep it relatively simple.

Since I have this tutorial written up I will hopefully keep it updated as I come across interesting things. Just a few days ago I learned about the back-ticks around something to convert to a string. E.g.,

Link: "Brief Introduction to Python"

In Dr. Tim Finin's Principles of Programming Languages course (CMSC 331), I will be giving a guest lecture tutorial on Python. Most of these students have a semester or two of Java and C experience from introductory computer science classes, so I tried to keep it relatively simple.

Since I have this tutorial written up I will hopefully keep it updated as I come across interesting things. Just a few days ago I learned about the back-ticks around something to convert to a string. E.g.,

`12345`[2] returns '3'. I saw this in one of my student's code for one of their coding assignments; I should read more code other than my own.Link: "Brief Introduction to Python"

Tags:

Python

Introduction

This blog will serve mainly as a documentation tool for my academic endeavors (dissertation research, classwork, teaching, etc.). I often update my personal website with no way of saying what is new and interesting that is going on.

Therefore, this will be my "news" site, and my original website will continually serve as a reference.

Therefore, this will be my "news" site, and my original website will continually serve as a reference.

Tags:

meta-work

Subscribe to:

Posts (Atom)